Are We Really Stuck with One Socket, One Port?

How eBPF sk_lookup Allows Dynamic Traffic Redirection

Most UNIX programming textbooks, and most everyday practice, treat the following statements as true:

One socket can be opened by one and ONLY one process

One socket can listen/serve on one and ONLY one port

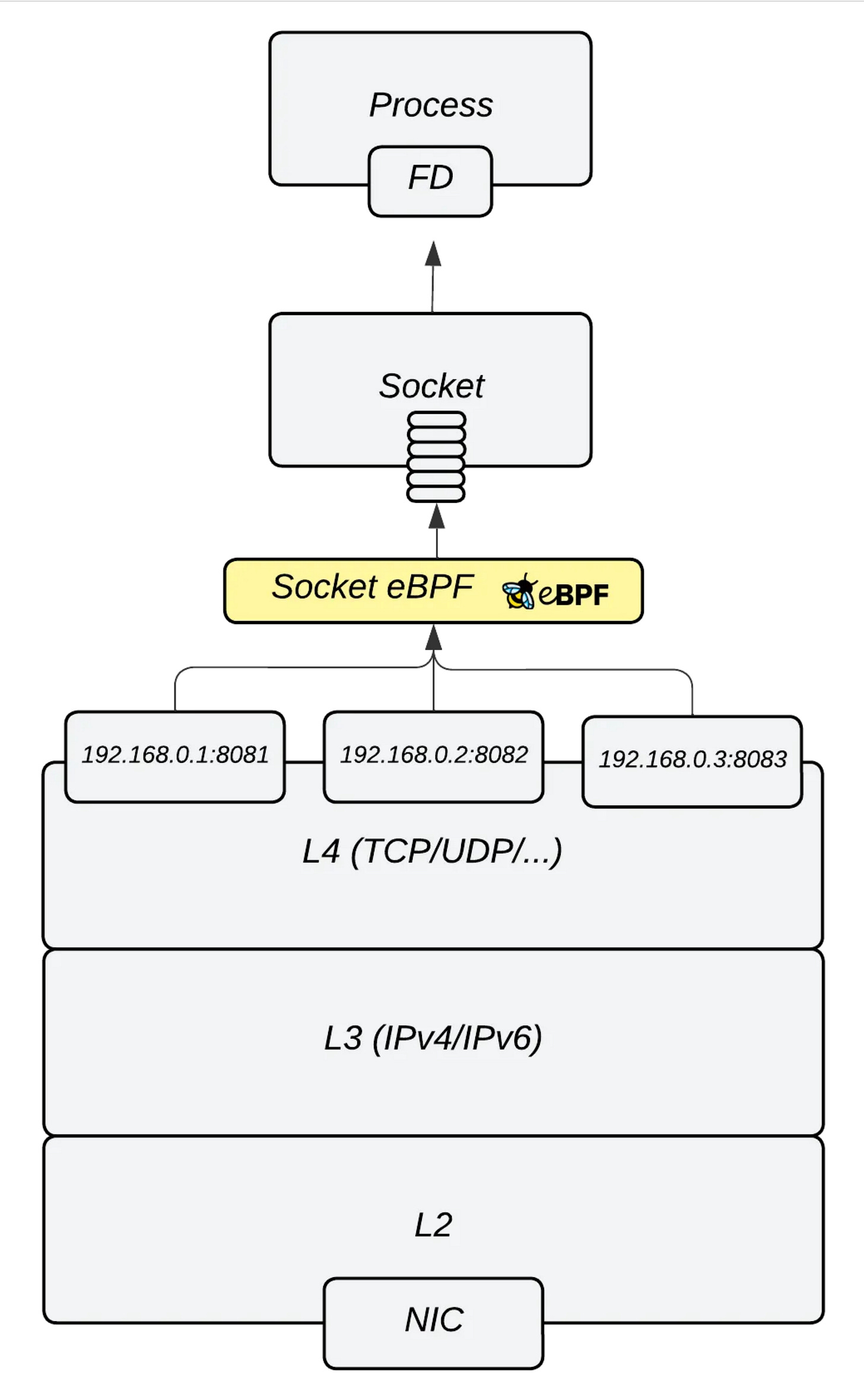

However, eBPF sk_lookup (available since Linux kernel 5.9) overcomes these limitations: it lets you redirect traffic for any IP-port pair to a listening socket of your choice.

The Problem

There are setups where binding sockets to multiple addresses or ports with the bind() system call is not practical, such as:

Receiving connections on multiple IP addresses, e.g. 192.0.2.0/24. bind() only accepts a single IP address; the alternative is INADDR_ANY (0.0.0.0), which is not desirable if another application should answer on a different range of IPs.

Handling connections on all ports (e.g., for a TCP proxy like Cloudflare Spectrum). With bind(), each socket is bound to one specific port. If you need to listen on every port of a particular IP, bind() requires many sockets, each bound to its own port, leading to resource consumption and potential latency spikes during socket lookup.
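To make the one-socket-per-port cost concrete, here is a minimal sketch in Python. The helper name `listen_on_ports` is made up for this illustration, and it binds to loopback with port 0 (which asks the kernel for any free port) so it runs without privileges; a real PORT_ANY setup would need one such socket for every port it wants to cover.

```python
import socket

def listen_on_ports(host, ports):
    """Open one listening TCP socket per requested port (hypothetical helper)."""
    sockets = []
    for port in ports:
        s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        s.bind((host, port))  # bind() takes exactly one (address, port) pair
        s.listen()
        sockets.append(s)
    return sockets

# Port 0 lets the kernel pick a free port for each socket.
listeners = listen_on_ports("127.0.0.1", [0, 0, 0])
bound_ports = [s.getsockname()[1] for s in listeners]
print(f"{len(listeners)} sockets opened to cover ports {bound_ports}")

for s in listeners:
    s.close()
```

Covering three ports takes three sockets, and the count grows linearly from there.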

Socket lookup latency spikes?

In the kernel, there’s a listening hash table (LHTABLE) that maps incoming network packets to the appropriate listening socket. While this mapping process might sound straightforward, it can incur a performance cost.

The hash table functions as a key-value store where each key is computed as the destination port number modulo the hash table size (which is 32 by default). For example:

16725 % 32 = 21

53 % 32 = 21

63925 % 32 = 21

These destination ports all map to key 21. However, they don’t overwrite each other’s value under that key, as you might expect; instead, the value under each key is a linked list of listening sockets.

This means that whenever a packet arrives at the LHTABLE, the hash table key is determined by the destination port number. Then, the linked list under this key is traversed until the IP address matches a listening socket entry.

This traversal can be quite slow if the linked list is long, which happens with a large number of listening sockets.
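The bucket-and-chain behaviour described above can be modelled in a few lines of Python. This is a toy user-space sketch, not kernel code: the class `ListeningTable`, the constant `LHTABLE_SIZE`, and the address 192.0.2.1 are assumptions made for the illustration, and the match on an (IP, port) pair is a simplification of the kernel's real matching rules.

```python
from collections import defaultdict

LHTABLE_SIZE = 32  # bucket count quoted in the text

def bucket_for(port):
    """Key of the bucket a destination port falls into."""
    return port % LHTABLE_SIZE

class ListeningTable:
    """Toy model: each bucket holds a list of (ip, port) listeners."""

    def __init__(self):
        self.buckets = defaultdict(list)

    def add(self, ip, port):
        self.buckets[bucket_for(port)].append((ip, port))

    def lookup(self, ip, port):
        """Walk the bucket's chain; return (match, entries_visited)."""
        visited = 0
        for entry in self.buckets[bucket_for(port)]:
            visited += 1
            if entry == (ip, port):
                return entry, visited
        return None, visited

table = ListeningTable()
for port in (16725, 53, 63925):
    table.add("192.0.2.1", port)

print([bucket_for(p) for p in (16725, 53, 63925)])  # [21, 21, 21]
print(table.lookup("192.0.2.1", 63925))  # (('192.0.2.1', 63925), 3)
```

The last lookup has to walk past the two other listeners that share key 21 before it finds its match, which is the cost that grows with the length of the chain.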

For instance, with 65,000 listening sockets (one for each port) in a PORT_ANY setup, each LHTABLE key ends up holding about two thousand items:

65,000 / 32 keys ≈ 2,000

The Solution

The eBPF sk_lookup program allows custom logic for mapping incoming traffic to the appropriate listening socket.

It runs when the transport layer searches for a listening (TCP) or unconnected (UDP) socket, before traversal of the LHTABLE. This hook is triggered ONLY for listening sockets, while traffic to established (TCP) or connected (UDP) sockets bypasses it.

In fact, this is how Cloudflare's open-source project, Tubular, overcomes the described bottleneck and enables the serving of multiple services with overlapping ports on each of their edge servers.
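The lookup order described in this section can be sketched as a small user-space model in Python. This is not an eBPF program and not how Tubular is implemented; the function names (`steer_program`, `find_socket`), the socket labels, and the addresses are all invented for the illustration. It only shows the ordering: established sockets first, then the sk_lookup hook, then the regular listening-table fallback.

```python
import ipaddress

# Hypothetical range whose traffic should reach one proxy socket on any port.
STEERED_NET = ipaddress.ip_network("192.0.2.0/24")

def steer_program(packet):
    """Stand-in for an sk_lookup program: return a socket, or None to pass."""
    if ipaddress.ip_address(packet["dst_ip"]) in STEERED_NET:
        return "proxy-socket"
    return None

def find_socket(packet, established, listening, program=steer_program):
    # 1. Established (TCP) or connected (UDP) sockets match on the full
    #    4-tuple and never reach the hook.
    four_tuple = (packet["src_ip"], packet["src_port"],
                  packet["dst_ip"], packet["dst_port"])
    if four_tuple in established:
        return established[four_tuple], "established"
    # 2. The hook runs before the listening table is consulted.
    chosen = program(packet)
    if chosen is not None:
        return chosen, "sk_lookup"
    # 3. Otherwise fall back to the regular listening-socket lookup.
    return listening.get((packet["dst_ip"], packet["dst_port"])), "lhtable"

established = {("198.51.100.7", 40000, "192.0.2.5", 443): "conn-socket"}
listening = {("203.0.113.9", 22): "ssh-socket"}

def pkt(src_port, dst_ip, dst_port):
    return {"src_ip": "198.51.100.7", "src_port": src_port,
            "dst_ip": dst_ip, "dst_port": dst_port}

print(find_socket(pkt(40000, "192.0.2.5", 443), established, listening))
print(find_socket(pkt(40001, "192.0.2.5", 9999), established, listening))
print(find_socket(pkt(40002, "203.0.113.9", 22), established, listening))
```

In this model a single "proxy-socket" answers for every port in 192.0.2.0/24 without being bound to any of them, while traffic outside that range still goes through the ordinary listening lookup.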

Code Example

Diving into the Tubular code would be too much for this context, so I’ve put together a simple demo project.

I find rendering code examples in Substack tedious, so I’ll point you to my GitHub repository, which holds the complete code demonstration.

I hope you find this resource as interesting as I do. Stay tuned for more exciting developments and updates in the world of eBPF in next week's newsletter.

Until then, keep 🐝-ing!

Warm regards, Teodor