eBPF Stateful Programs and State Synchronization Problem

eBPF Maps State Synchronization across Multiple Nodes

Like any other stateful application, eBPF programs need somewhere to keep their state, and for them that place is eBPF Maps. However, to avoid a single point of failure, these applications are often deployed across multiple nodes in a so-called high-availability setup.

But how do we ensure that decisions like IP blacklisting or DNS client rate-limiting remain consistent across all nodes when each node maintains its own eBPF Map to track client request counts?

In today’s newsletter, we’ll explore the problem of state synchronization of eBPF Maps across multiple nodes.

The Problem

Today, eBPF programs are widely used for stateful networking solutions such as:

Load Balancing: Storing information about backend servers, including IP addresses, ports, and health states, to choose one for forwarding incoming traffic.

Connection Tracking: Storing timestamps for connection activity. If a connection remains idle for too long, the eBPF program can clean up old entries, effectively implementing a garbage collection mechanism for expired sessions.

Firewalls: Storing state information about active connections, such as the number of packets or bytes associated with an IP address within a time window, to dynamically enforce rate limits or blacklist clients.

Unlike stateless applications, high-availability stateful applications often need to maintain consistent state information across all nodes in a cluster. For an eBPF application, this means the contents of each node's eBPF Map must be kept in sync with every other node's.
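
To make the problem concrete, here is a minimal Python sketch of the rate-limiting case. It is a plain user-space simulation, not eBPF code: each hypothetical `Node` keeps a dictionary standing in for its local eBPF Map, and the limit of 10 requests and the client address are made-up values.

```python
from collections import defaultdict

RATE_LIMIT = 10  # assumed per-client request limit, for illustration only


class Node:
    """A cluster node whose dict stands in for its local eBPF hash map."""

    def __init__(self, name):
        self.name = name
        self.request_counts = defaultdict(int)

    def handle_request(self, client_ip):
        """Count the request; return True if allowed, False if rate-limited."""
        self.request_counts[client_ip] += 1
        return self.request_counts[client_ip] <= RATE_LIMIT


node_a, node_b = Node("a"), Node("b")
client = "192.0.2.7"  # documentation-range address

# The client spreads 16 requests evenly over the two nodes.
decisions = [
    node.handle_request(client)
    for _ in range(8)
    for node in (node_a, node_b)
]

total_seen = node_a.request_counts[client] + node_b.request_counts[client]
blocked = decisions.count(False)
```

Each node only ever sees 8 requests, so `blocked` stays at 0 even though the cluster as a whole served 16, well over the limit. Without synchronization, no single node has enough information to make the right decision.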

💡 eBPF maps are key-value data structures used to store and share data between eBPF programs and user-space applications or across different eBPF programs.

However, as far as I know, there is currently no synchronization tool or daemon available for eBPF Maps.

The Solution

To address this, I decided to build a solution myself.

⚠️ Note: This is not a production-ready solution, but rather a proof of concept.

Here’s a high-level overview of the solution:

This approach leverages asynchronous notifications of eBPF map updates (in red 🔴). Whenever a change occurs in a specific eBPF map, the update is first forwarded to the user space of the host. From there, it's sent to all peers using the gRPC protocol (in blue 🔵), allowing them to synchronize accordingly.

🔴 Detecting eBPF Map Updates

To keep things simple, we focus on detecting and synchronizing updates for eBPF hash maps, though the concept would be similar for other eBPF map types.

Detection of updates is achieved by two eBPF programs:

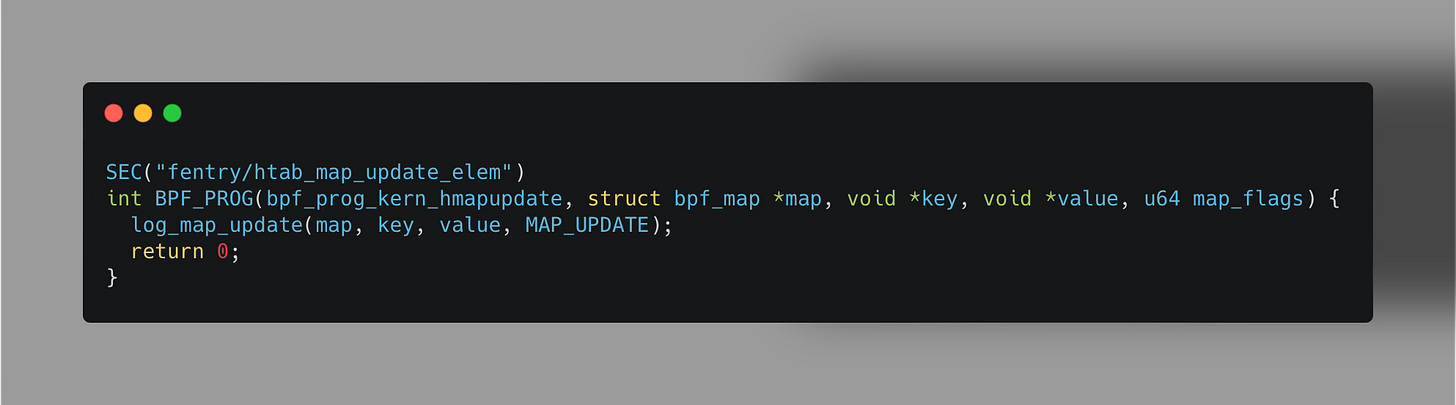

fentry/htab_map_update_elem: Triggered on element update in the Hash eBPF map.

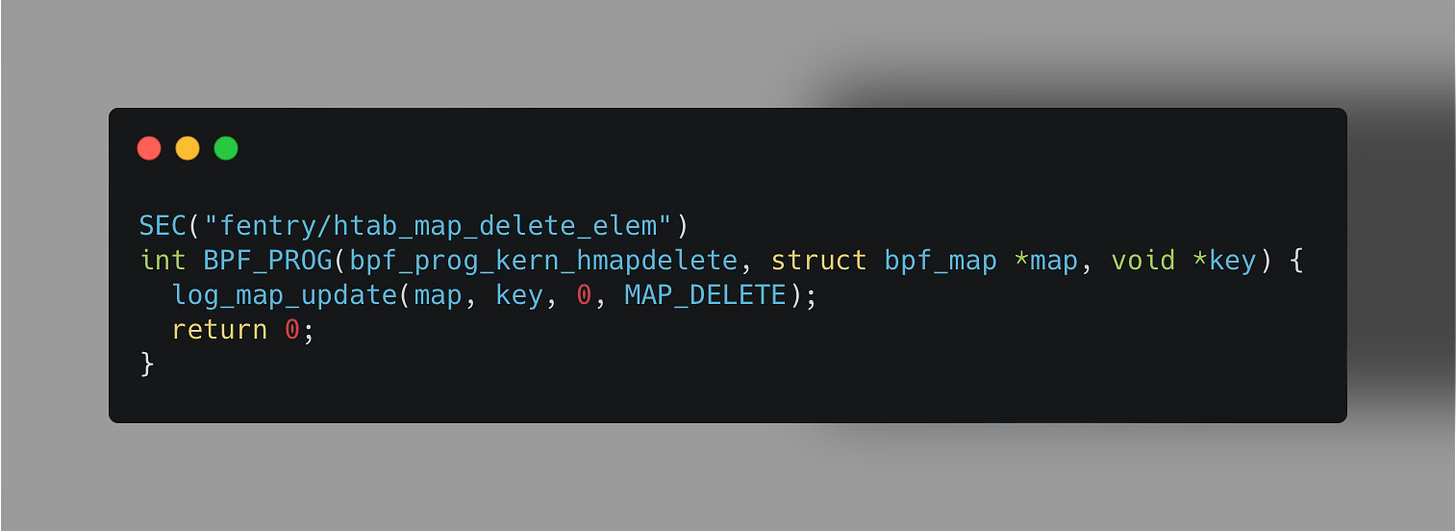

fentry/htab_map_delete_elem: Triggered on element deletion in the Hash eBPF map.

In the code, the log_map_update function is responsible for unpacking the function arguments, specifically the eBPF Map metadata (Map ID, name, etc.) along with the corresponding key-value pair update.

⚠️ Note: These hooks are triggered whether the eBPF hash map element is updated from user space or from kernel space, through the invocation of eBPF helper functions in your kernel programs.

Inside the log_map_update function, an eBPF ring buffer is then utilized to forward these eBPF map update events to our user-space application.
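
On the user-space side, each ring buffer record has to be turned back into map metadata plus the key-value pair. The sketch below shows the idea with Python's `struct` module. The record layout (map ID, 16-byte name, operation, key size, value size, followed by the raw key and value) and the operation codes are assumptions made for illustration, not the layout used in the actual repository.

```python
import struct
from dataclasses import dataclass

# Hypothetical fixed header: map_id (u32), map name (16 bytes),
# operation (u32), key_size (u32), value_size (u32), little-endian.
HEADER = struct.Struct("<I16sIII")
OP_UPDATE, OP_DELETE = 0, 1  # assumed operation codes


@dataclass
class MapEvent:
    map_id: int
    map_name: str
    op: int
    key: bytes
    value: bytes


def encode_event(event):
    """Build a record the way the kernel side is assumed to lay it out."""
    header = HEADER.pack(
        event.map_id,
        event.map_name.encode(),  # struct pads with NUL bytes up to 16
        event.op,
        len(event.key),
        len(event.value),
    )
    return header + event.key + event.value


def decode_event(record):
    """Parse one raw record back into a MapEvent."""
    map_id, raw_name, op, key_size, value_size = HEADER.unpack_from(record)
    key_start = HEADER.size
    value_start = key_start + key_size
    key = record[key_start:value_start]
    value = record[value_start:value_start + value_size]
    name = raw_name.split(b"\x00", 1)[0].decode()
    return MapEvent(map_id, name, op, key, value)


# Round trip: a 4-byte IPv4 key with an 8-byte counter value.
sample = MapEvent(7, "rate_limit", OP_UPDATE,
                  bytes([192, 0, 2, 7]), struct.pack("<Q", 11))
decoded = decode_event(encode_event(sample))
```

Carrying the key and value sizes in the header lets one decoder handle maps with different key and value types. A delete event would simply carry an empty value.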

⬇️ Complete code is available at the bottom.

🔵 State Change Broadcast

Once the eBPF map update events are received in user space, they are broadcast to other nodes in the cluster using gRPC, a remote procedure call (RPC) framework.

There’s no specific reason for choosing gRPC. Other frameworks could be used just as well, and the choice makes no difference for the proof of concept.

💡 One can optionally filter for specific eBPF maps or events to synchronize across the cluster, either in user space or kernel space.
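
In user space, such a filter can be as simple as an allow-list of map names checked before an event is broadcast. In this sketch the map names and the `should_sync` helper are hypothetical.

```python
# Hypothetical allow-list: only these maps are synchronized across the cluster.
SYNCED_MAPS = {"rate_limit", "blacklist"}


def should_sync(map_name, synced_maps=SYNCED_MAPS):
    """Return True if updates to this map should be sent to peers."""
    return map_name in synced_maps


# (map name, key) pairs standing in for decoded map update events.
events = [("rate_limit", b"k1"), ("debug_stats", b"k2"), ("blacklist", b"k3")]
to_broadcast = [event for event in events if should_sync(event[0])]
```

Filtering in kernel space instead would avoid pushing unwanted events through the ring buffer in the first place, at the cost of more logic in the eBPF program.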

In our setup, each node in the cluster functions as both a gRPC server and a client. To be more specific, it acts as:

Server, to receive and set updates from other nodes through the SetValue gRPC call

Client, to send (sync) messages to the rest of the cluster

This “dual role” ensures that all nodes remain synchronized with the latest changes to the eBPF maps.
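
The dual role can be sketched in a few lines of Python. This is an in-memory stand-in, not real gRPC: plain method calls replace the network, `set_value` mimics the SetValue call, and the `SyncNode` class, the use of `None` to signal a deletion, and the sample addresses are all assumptions of this sketch.

```python
class SyncNode:
    """One cluster node acting as both 'server' and 'client'."""

    def __init__(self, name):
        self.name = name
        self.table = {}   # stands in for the local eBPF hash map
        self.peers = []   # other SyncNode objects (stand-ins for gRPC stubs)

    def _apply(self, key, value):
        """Write to the local table; None means delete (sketch convention)."""
        if value is None:
            self.table.pop(key, None)
        else:
            self.table[key] = value

    def set_value(self, key, value):
        """Server role: apply an update received from a peer."""
        self._apply(key, value)

    def broadcast(self, key, value):
        """Client role: push a locally observed change to every peer."""
        for peer in self.peers:
            peer.set_value(key, value)

    def local_update(self, key, value):
        """Simulate a local map change followed by its ring buffer event."""
        self._apply(key, value)
        self.broadcast(key, value)


nodes = [SyncNode(name) for name in ("a", "b", "c")]
for node in nodes:
    node.peers = [other for other in nodes if other is not node]

nodes[0].local_update("192.0.2.7", 11)       # node a counts an 11th request
nodes[1].local_update("198.51.100.3", 1)     # node b adds a new client
nodes[2].local_update("198.51.100.3", None)  # node c deletes that entry

tables = [node.table for node in nodes]
```

After these three changes every node holds the same table. The sketch leaves out two things a real implementation has to deal with: conflicting concurrent updates, and the fact that applying a peer's update from user space would itself trigger the hooks described earlier, so some way of not re-broadcasting those writes is needed.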

Code Example

I find rendering code examples in Substack tedious, so I’ll point you to my GitHub repository, which contains the complete code for everything we discussed.

Here’s the link.

I hope you find this resource as enlightening as I did. Stay tuned for more exciting developments and updates in the world of eBPF in next week's newsletter.

Until then, keep 🐝-ing!

Warm regards, Teodor