eBPF and the Energy Footprint of Modern AI Infrastructure

As AI and cloud workloads grow, eBPF is powering a new wave of energy observability

At KubeCon + CloudNativeCon Europe in London last month, there was a lot of focus on OTLP, Kubernetes, and AI—but what really caught my attention was the growing emphasis on green computing.

With the rapid rise of AI adoption, there's a growing demand for data centers worldwide to expand their GPU infrastructure to support model training and inference workloads.

However, GPUs—especially during the training phase of large AI models—are notoriously resource-intensive. They consume vast amounts of electricity and generate significant heat, contributing to a substantial carbon footprint.

Just to put things in perspective:

Training GPT-3 used the same electricity as 130 U.S. homes annually

The International Energy Agency (IEA), in its global electricity forecast covering AI, data centers, and cryptocurrency, reported they accounted for nearly 2% of global consumption in 2022—and warned this could double by 2026, matching Japan’s total usage

A Google search uses 0.3 Wh, while a ChatGPT request uses 2.9 Wh. If ChatGPT powered all 9 billion daily searches, electricity use would rise by 10 TWh per year—enough to power 1.5 million EU residents

While GPUs aren’t the sole contributors to global warming, their energy usage—particularly in large-scale AI training—has a growing environmental impact.

So why should you care?

I believe we're nearing a point where optimizing services based on energy metrics is becoming a necessity—not only to reduce our carbon footprint, but also to mitigate the substantial costs likely to arise from emerging regulations.

For example, the EU’s Green Deal and Energy Efficiency Directive already requires data centers and cloud providers to report environmental impacts and cut greenhouse gases by 55% until 2030.

Similarly in the US, where the Energy Act of 2020 mandates regular efficiency audits of federal data centers.

Or in Germany, the Energy Efficiency Act (EnEfG) has a particular focus on data center efficiency and the use of renewable sources as well as targets for reducing consumption.

While efforts are underway to improve hardware efficiency and power data centers with green energy, many IT professionals believe it's only a matter of time before regulations require teams to run workloads more efficiently and account for the energy consumed by cloud-native applications.

But as an infrastructure engineer, where do you even start to address this concern?

You might have access to your machines' power usage in your on-prem cluster or a cloud provider’s energy dashboard, but that only gets you so far.

Ideally, you'd want visibility into how much energy each workload consumes—so you can optimize accordingly or relocate it to a more energy-efficient machine or cloud region.

But how do you get that level of insight?

Fortunately, some engineers at large enterprises have already faced this challenge.

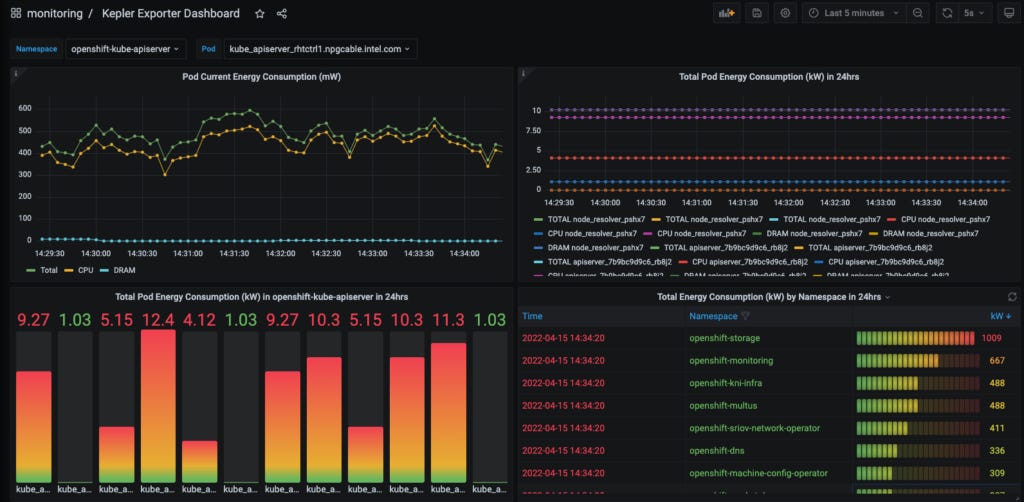

In particular, Red Hat (or IBM, if you prefer) has open-sourced and contributed a project to the CNCF called Kepler (Kubernetes-based Efficient Power Level Exporter).

Kepler supplies the raw data for carbon and energy reports of you cloud-native workloads.

Using it, one is able to break out energy consumption by process, container, pod or namespace which is also incredibly important for multi-tenant environments that must allocate emissions to projects or customers.

And it’s not just Kepler—there’s huge potential for a whole new class of products that could prove invaluable to companies in the near future.

Imagine Kubernetes orchestrators that can dynamically relocate microservices across nodes—or bring them closer together—to minimize energy usage, or that schedule energy-intensive workloads in greener cloud regions.

Regardless of the orchestration logic, one thing is clear: we need a new generation of energy observability tools. Tools that go beyond machine-level metrics and deliver fine-grained insights at the level of individual processes, containers, or pods.

Now, you might be thinking: why is a blog about eBPF talking about energy efficiency?

Well, because quite interestingly, not only eBPF application can consume less resources which adds up on a larger scale but also there’s an ongoing work on tools that aid in transparency of the energy consumption and eBPF plays a role in it.

In fact, Kepler itself uses eBPF to probe energy-related system stats and exports them as Prometheus metrics.

While Kepler isn't GPU-specific—and GPUs are notoriously high in energy consumption—consider tools like zymtrace, a distributed GPU Profiler that also leverages eBPF.

I think these tools will play an incredibly important role in your infrastructure, especially as regulations may push providers to pass on costs or enforce carbon-related pricing in the future.

I’d like to end this post with a quote:

Eventually the cost of intelligence, the cost of AI, will converge to the cost of energy. And the event and if it’ll be how much you can have the abundance of it will be limited by the abundance of energy.

Sam Altman, U.S. Senate hearing, 2025

⏪ Did you miss the previous issues? I'm sure you wouldn't, but JUST in case:

I hope you find this resource helpful. Keep an eye out for more updates and developments in eBPF in next week's newsletter.

Until then, keep 🐝-ing!

Warm regards, Teodor